Back in 2014 I wrote about the custom occlusion system we built for DEAD SECRET. It’s a pretty simple system that works by knowing all of the places the player can stand ahead of time. It allowed us to cut our draw call count way down and ship high-fidelity mobile VR nearly two years ago. But DEAD SECRET CIRCLE, the sequel to DEAD SECRET that we announced last month (check the teaser!), has a lot of new requirements. One major change is the ability to move around the environment freely, which the DEAD SECRET system didn’t support. We needed a new way to manage occlusion for this title.

First we tried to leverage Unity’s built-in occlusion system, which is based on Umbra, an industry-standard tool that’s been around for over a decade. But Unity’s interface to this tool is exceptionally restricted, with very few controls available. The values that are exposed are hard to understand in terms of world units (the internet theorizes that the scale value is off by a factor of 10), and in some cases the documentation Unity provides is misleading and/or false. While Unity’s built-in culling does work (very well!) in some cases, it is hard to understand why it fails in others. The debug visualization adds to the confusion: when a “view line” passes straight through a wall, are we supposed to believe that the debug view is inaccurate, that the occlusion has broken, or that this is the way it’s “supposed” to work? After about six months of trying to get by with Unity’s occlusion system, I gave up and decided to revisit the custom tech we wrote for DEAD SECRET.

Robot Invader’s internal technology stack is called KARLOFF, and it has had a hand in every game we’ve shipped since our first in 2011. The great thing about a mature technology stack is that we can build new tools very quickly. Using existing KARLOFF tech, we built a new version of our occlusion system in less than a week.

Our new system is still based on rendering panoramas and color-coding geometry to find potentially visible geometry sets from a given point in space. But for a world in which the camera can move freely, we need a lot more points. We also need a way to map the current camera position to a set of visible geometry. All of a sudden this system goes from being a simple occlusion calculation to computing a full-blown potentially visible set.

My goal is always to trade build time and runtime memory (both of which we have plenty of) for runtime performance (which we are always constrained by). Therefore this system uses a 3D grid (as opposed to a BSP or kd-tree, which are common for this purpose) that can index into a set of visible geometry in O(1) at runtime. The world is cut up into grid cells and a panorama is rendered for each. The resulting geometry information is stored back to the grid and then looked up as the camera moves through the scene at runtime. Simple, right?

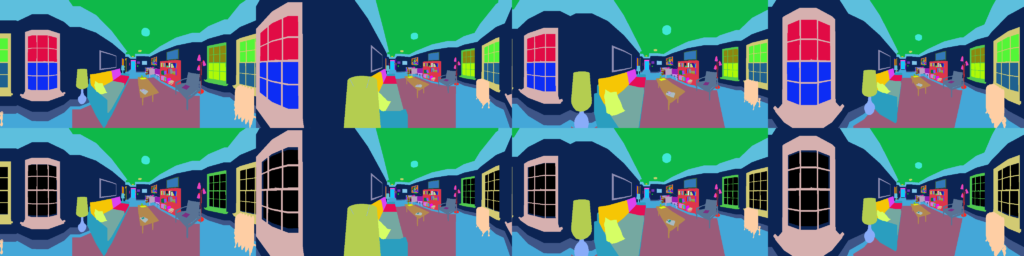
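To make the O(1) lookup concrete, here is a minimal sketch of a uniform grid that maps a camera position to a precomputed visible set. It is written in Python for brevity rather than in the C# the game actually uses, and every name in it (`VisibilityGrid`, `cell_index`, `visible_set`) is hypothetical, not part of KARLOFF or Unity.

```python
import math
from dataclasses import dataclass, field


@dataclass
class VisibilityGrid:
    """Uniform 3D grid; each cell stores the renderer IDs visible from it."""
    origin: tuple      # world-space minimum corner (x, y, z)
    cell_size: float   # edge length of each cubic cell, in world units
    dims: tuple        # number of cells along (x, y, z)
    cells: list = field(default_factory=list)  # flat list of frozensets

    def __post_init__(self):
        if not self.cells:
            nx, ny, nz = self.dims
            self.cells = [frozenset()] * (nx * ny * nz)

    def cell_index(self, pos):
        """Return the flat cell index for a position, or None if outside."""
        coords = []
        for p, o, n in zip(pos, self.origin, self.dims):
            c = math.floor((p - o) / self.cell_size)
            if c < 0 or c >= n:
                return None
            coords.append(c)
        x, y, z = coords
        nx, ny, _ = self.dims
        return x + nx * (y + ny * z)

    def visible_set(self, pos):
        """Constant-time lookup: a few divides and one list index."""
        idx = self.cell_index(pos)
        return None if idx is None else self.cells[idx]
```

The lookup cost does not depend on how many cells or objects the level contains, which is the property that makes the memory-for-speed trade worthwhile.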

Well, the devil is in the details. There’s a trade-off between cell size, accuracy, and bake time. Very small cells (say, 0.3 meters per side) result in highly accurate occlusion but take a long time to bake. For DEAD SECRET CIRCLE our primary goal is to cut away entire rooms and building floors that are on the other side of a wall, not to occlude small objects within the frustum. We can get away with a larger cell if we render occlusion from several points within the cell and then union the results into a single set. We actually need to do two passes, one with transparent geometry hidden and the other with it rendered opaque (in order to catch both the objects behind a transparent surface and the surface itself). Here’s an example of these two passes rendered from four different points within a cell.

The output of this boils down to a bunch of lists of MeshRenderer pointers that get enabled and disabled as sets are selected. It’s also necessary to do another set of renders for every occlusion portal (e.g. a door that can be opened or closed) so that we can adjust the visibility of the objects on the other side when the portal is opened at runtime. At this point we have a fully functioning, highly accurate occlusion system that is nearly free at runtime.
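As a rough sketch of how the bake output might be organized, the snippet below unions the results of both passes from every sample point into one set per cell, then adds per-portal sets at runtime. The `render_ids` callback is a stand-in for the panorama render and color lookup, and storing portal results as additive "extra" sets is an assumption for illustration; the real system may store them differently.

```python
from dataclasses import dataclass


def bake_cell(sample_points, render_ids):
    """Union the IDs seen from every sample point in both passes.

    render_ids(point, transparent_hidden) is a hypothetical callback that
    returns the set of renderer IDs found in one rendered panorama.
    """
    visible = set()
    for point in sample_points:
        # Pass 1 hides transparent geometry to catch what lies behind it;
        # pass 2 draws it opaque to catch the transparent surface itself.
        for transparent_hidden in (True, False):
            visible |= render_ids(point, transparent_hidden)
    return frozenset(visible)


@dataclass(frozen=True)
class CellVisibility:
    base: frozenset      # visible with every portal closed
    behind_portal: dict  # portal name -> extra IDs visible when it is open


def resolve(cell, open_portals):
    """Combine the base set with the sets for currently open portals."""
    visible = set(cell.base)
    for portal in open_portals:
        visible |= cell.behind_portal.get(portal, frozenset())
    return visible


def apply_visibility(renderers, visible):
    """Enable exactly the renderers in the set (dicts stand in for MeshRenderers)."""
    for renderer_id, renderer in renderers.items():
        renderer["enabled"] = renderer_id in visible
```

Because everything is resolved to plain sets at bake time, the runtime work is limited to a few set unions when a portal changes state and a pass over the renderers when the camera changes cell.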

But there’s a catch: this method relies on walking a texture like the one above and picking colors to match to meshes. At 1024×512 per panorama (which seems to be the minimum resolution we can get away with based on the size of our objects in the world), a full transparent / nontransparent pass from four points results in a 4096×1024 image. With a 1x1x1 cell size we end up with about 450 cells for this small apartment level, which is 1,426,063,360 pixel compares. Add in more passes for portals and this time starts to climb quickly. Plus, 1x1x1 might not be small enough for perfect accuracy; we get better results with cells 0.5 units on a side, which on this level take nearly 20 minutes to compute. I know I just wrote that I was willing to trade build time for runtime performance, but 20 minutes to compute occlusion is unreasonable.
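The pixel walk and its cost can be sketched as follows. The 24-bit ID-to-color packing and the reserved background value are assumptions for illustration; the post does not say how colors are actually assigned to meshes.

```python
BACKGROUND = 0  # assumption: ID 0 (black) is reserved for "nothing drawn"


def id_to_color(renderer_id):
    """Pack a 24-bit renderer ID into an (r, g, b) tuple."""
    return ((renderer_id >> 16) & 0xFF,
            (renderer_id >> 8) & 0xFF,
            renderer_id & 0xFF)


def color_to_id(color):
    """Inverse of id_to_color."""
    r, g, b = color
    return (r << 16) | (g << 8) | b


def visible_ids(pixels):
    """Walk every pixel and collect the distinct renderer IDs it contains."""
    seen = set()
    for color in pixels:
        renderer_id = color_to_id(color)
        if renderer_id != BACKGROUND:
            seen.add(renderer_id)
    return seen


def pixels_per_cell(width=1024, height=512, sample_points=4, passes=2):
    """Pixels examined per cell: one panorama per sample point per pass."""
    return width * height * sample_points * passes
```

With the defaults, `pixels_per_cell()` is 4,194,304, which matches a 4096×1024 image, and the 1,426,063,360 total is exactly 340 of those images. The total scales linearly with the number of cells, so halving the edge of a cubic cell multiplies the work by roughly eight.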

There are probably some smart ways to tighten the algorithm itself up. I managed to achieve a 5x speedup by optimizing just the inner pixel compare loop. But part of the problem here is the design: the cells are of a fixed size and sometimes intersect with walls. Cells have to be fairly small to prevent objects from the other side of a wall from being pulled into the set. Plus, levels that aren’t rectangular in shape end up with a lot of cells in dead areas where the camera will never go, rendered for no reason.

The next iteration of this system is to stop blindly mapping the entire level and instead restrict cells to hand-authored volumes. Unity even provides a useful interface for this with the fairly mysterious Occlusion Area. Exactly how it works with Umbra is the topic of some debate (compare Intel’s documentation about the use of Occlusion Areas to Unity’s own), but for our purposes we’re just using it as a way to size axis-aligned volumes in the world. Each Occlusion Area produces an “island” of cells, and when within an island the camera can still find its cell in O(1). Occlusion Islands don’t all need to be the same resolution. In fact, the cells don’t even need to be cubes any longer. We can expose controls to set the granularity of the world per axis, resulting in rectangular volumes. Why compute extra cells near the ceiling if the camera isn’t ever going to go up there?
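A minimal sketch of the island idea, assuming each island is an axis-aligned box with its own per-axis cell size. The names are hypothetical, and the linear scan over islands is a simplification chosen for brevity; only the within-island lookup is meant to be O(1).

```python
import math
from dataclasses import dataclass


@dataclass
class OcclusionIsland:
    min_corner: tuple  # axis-aligned bounds in world space
    max_corner: tuple
    cell_size: tuple   # per-axis cell dimensions (x, y, z)
    cells: dict        # flat cell index -> frozenset of renderer IDs

    def dims(self):
        """Number of cells along each axis (at least one)."""
        return tuple(
            max(1, math.ceil((hi - lo) / size))
            for lo, hi, size in zip(self.min_corner, self.max_corner, self.cell_size)
        )

    def contains(self, pos):
        return all(
            lo <= p < hi
            for p, lo, hi in zip(pos, self.min_corner, self.max_corner)
        )

    def cell_index(self, pos):
        """Flat index of the cell holding pos; assumes contains(pos) is True."""
        nx, ny, nz = self.dims()
        x, y, z = (
            min(n - 1, int((p - lo) // size))
            for p, lo, size, n in zip(pos, self.min_corner, self.cell_size, (nx, ny, nz))
        )
        return x + nx * (y + ny * z)


def lookup(islands, pos):
    """Find the island containing pos, then index its cell directly."""
    for island in islands:
        if island.contains(pos):
            return island.cells.get(island.cell_index(pos), frozenset())
    return None  # camera is outside every island
```

Setting the vertical cell size to the full height of the island, for example, collapses the grid to a single layer, which is one way to avoid baking cells near the ceiling.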

So now we have something that looks like a real occlusion system. It handles transparent objects and occlusion portals, and it can be easily controlled on a per-area basis by breaking the world up into islands of culling information. By tuning the granularity per island, the apartment area above went from 20 minutes to bake down to a very reasonable 1.5 minutes. This system can still be improved, and it doesn’t solve for dynamic objects at all, but already we’re achieving better results than we were able to after months of twiddling with Unity’s built-in occlusion system.

DEAD SECRET CIRCLE is scheduled to come out this year. Follow us on Twitter for more information, or sign up for the mailing list to get updates as they come out.