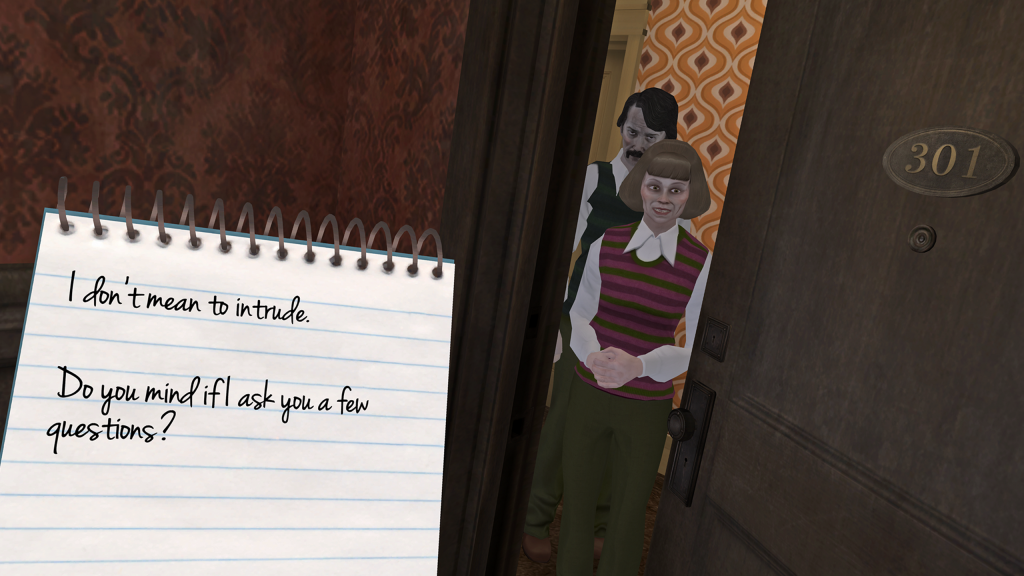

One of the new systems we’ve built for DEAD SECRET CIRCLE is a branching dialog system. As you explore the world you periodically interview suspects, and depending on your choices the conversation can go many different ways.

Branching dialog systems are pretty common, and ours is not particularly exotic. But even a straightforward branching conversation system can be fairly complicated to implement. For our system, I was interested in finding or building tools that would help me explore the flow of the dialog, and its various branches, in real time as I was editing. For me, the hardest part of this system is actually writing the dialog itself, so I needed a toolchain that would let me quickly edit and revise.

Building a Dialog System

There are a number of tools available for creating branching dialog trees. I looked at Yarn, a Twine-like editor built for Night in the Woods. Chat Mapper is a very serious (and very complicated-looking) tool that has an order of magnitude more features than I need. I even realized that I had written a markup syntax for branching dialog a decade ago that I’ve never used for anything. Though there are a lot of tools out there, it was hard to find something that both matched my needs and was powerful enough to justify not writing something myself.

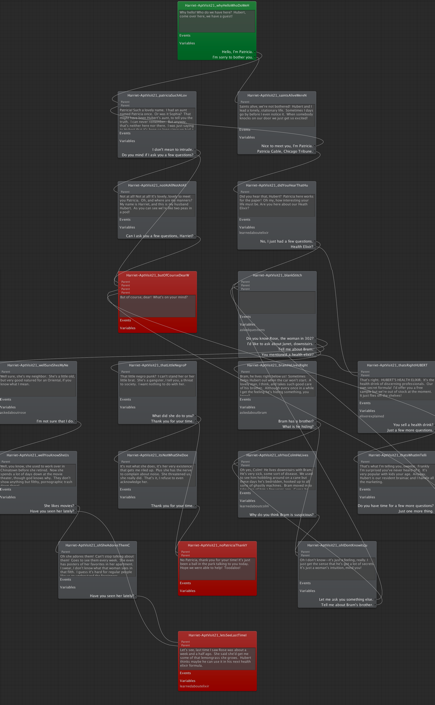

In the end I went with Inklewriter, a web-based tool that allows you to quickly lay out (and play through) branching dialog trees. It was written as a Twine competitor, I think, but its feature set was just the right fit for DEAD SECRET CIRCLE. It supports named variables, conditional branches, divert nodes (where dialog flow is diverted to another node in the middle of playback), and can output json. The interface is simple but powerful, and I can share fully playable dialog sequences with others before we push them into the game. Overall, it’s a smart tool made by developers who’ve done a lot of interactive storytelling themselves.

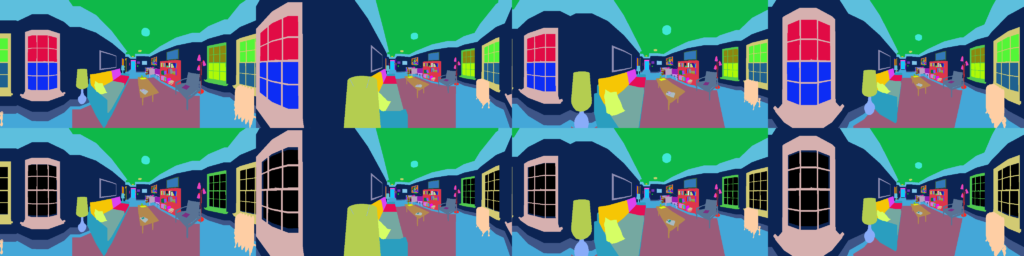

The next step was to write a Unity importer for Inklewriter’s output json. Inkle Studios, the authors of Inklewriter, actually supply their own Unity plugin for Ink, their much more powerful (and more complex) interactive novel language, but I needed to roll my own to use the simpler Inklewriter output.

I did this by creating a custom AssetPostprocessor for text files that looks for json files and parses them. The parser itself is straightforward: it is mostly just a translation of the json node hierarchy into a similar ScriptableObject graph, which is written to disk as an asset file. It also pulls all the strings out and puts them into a separate dictionary system, which provides key/value pairs for all strings in the game and is our main infrastructure for localization.

My workflow is to simply save Inklewriter’s output json into my Unity Assets folder and then point the runtime dialog system at the auto-generated asset, the root of which is the first node in the conversation. At runtime, a dialog manager “runs” each node by displaying the node’s text, flipping node variables, and presenting the player with response choices, which select transitions to other nodes. Text is pulled from the dictionary, and voice acting samples can also be pulled from another database using the same key. I even made a nifty visualization tool out of Unity’s undocumented graph API.
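To make the import-and-run pipeline concrete, here is a minimal Python sketch of the same idea outside Unity. It assumes a simplified, hypothetical export layout (a `stitches` map of named nodes with `text`, `set`, `options`, and `divert` fields) rather than Inklewriter’s actual json schema, and the names `import_conversation` and `DialogManager` are illustrative, not the game’s real code. The importer flattens the node hierarchy into a graph and moves every string into a keyed dictionary; the runtime shows text, sets flags, follows diverts, and hides choices whose condition flag isn’t set.

```python
import json
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical, simplified export layout (NOT Inklewriter's real schema):
# {"initial": "start",
#  "stitches": {"start": {"text": "...", "set": ["flag"], "divert": "next",
#                         "options": [{"text": "...", "to": "node", "if": "flag"}]}}}


@dataclass
class Option:
    text_key: str                    # key into the string dictionary
    target: str                      # name of the node this choice leads to
    condition: Optional[str] = None  # flag that must be set for the choice to appear


@dataclass
class Node:
    name: str
    text_key: str
    sets: list = field(default_factory=list)     # flags flipped when the node runs
    options: list = field(default_factory=list)  # player response choices
    divert: Optional[str] = None                  # continue straight into another node


def import_conversation(raw_json, prefix):
    """Translate the json node hierarchy into a node graph plus a string table."""
    doc = json.loads(raw_json)
    nodes, strings = {}, {}
    for name, stitch in doc["stitches"].items():
        key = f"{prefix}.{name}"
        strings[key] = stitch["text"]  # the same key could index a VO clip database
        options = []
        for i, opt in enumerate(stitch.get("options", [])):
            opt_key = f"{key}.opt{i}"
            strings[opt_key] = opt["text"]
            options.append(Option(opt_key, opt["to"], opt.get("if")))
        nodes[name] = Node(name, key, list(stitch.get("set", [])), options,
                           stitch.get("divert"))
    return nodes[doc["initial"]], nodes, strings


class DialogManager:
    def __init__(self, nodes, strings, root):
        self.nodes, self.strings = nodes, strings
        self.flags = set()
        self.current = root

    def enter(self):
        """Run the current node: show its text, set its flags, follow any divert."""
        lines, node = [], self.current
        while True:  # assumes divert chains never form a cycle
            lines.append(self.strings[node.text_key])
            self.flags.update(node.sets)
            if node.divert is None:
                break
            node = self.nodes[node.divert]
        self.current = node
        return lines

    def choices(self):
        """Response options whose condition flag (if any) is currently set."""
        return [o for o in self.current.options
                if o.condition is None or o.condition in self.flags]

    def choose(self, index):
        self.current = self.nodes[self.choices()[index].target]
        return self.enter()


SAMPLE = json.dumps({
    "initial": "start",
    "stitches": {
        "start": {"text": "You wanted to see me?", "options": [
            {"text": "Where were you last night?", "to": "alibi"},
            {"text": "Tell me about the ring.", "to": "ring", "if": "saw_ring"}]},
        "alibi": {"text": "At home. Alone.", "set": ["asked_alibi"], "divert": "end"},
        "ring": {"text": "It was my mother's.", "divert": "end"},
        "end": {"text": "Is that all?"},
    },
})

root, nodes, strings = import_conversation(SAMPLE, "suspect1")
dm = DialogManager(nodes, strings, root)
print(dm.enter())                                   # ['You wanted to see me?']
print([strings[o.text_key] for o in dm.choices()])  # ['Where were you last night?']
print(dm.choose(0))                                 # ['At home. Alone.', 'Is that all?']
```

Because `enter` keeps the last node reached after following diverts, a node with a divert in this sketch should not carry options of its own; a real importer would need to handle whatever else the actual export contains.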

Writing Branching Dialog

Once the tools and runtime were in order I was faced with the real task, which I had been avoiding: writing the actual dialog. There are so many approaches to branching conversation design that it’s hard to know where to start. I settled early on a fairly standard “call and response” model, in which you ask the NPC a question from a list of options and they give you an answer, because it mapped well to the story frame of interviewing suspects. But where to go from there? Should I encode a subtle moral choice into each question a la Mass Effect? Should I provide two plausible options and two joke options a la Disaster Report (I call this the “DMV Written Test Design”)? Should I give the player chances to ask questions more than once, or should choosing an option automatically close other options off (a la many games, but the best example is Firewatch)? Should the player’s question choices inform the personality of their player character? The design of the conversation itself was much more daunting than the code to run it.

The main reason to have a branching dialog system to begin with is to deliver information to the player in a way that gives her some agency and (hopefully) engenders some empathy for the NPC. Some information is critical, and I can’t allow the player to miss it by making bad dialog choices. Other information is optional, available to the players who choose to delve in further, who ask the game for more detail. The DEAD SECRET series is generally built upon a philosophy of narrative levels-of-detail: some folks will simply skim across the surface while others will choose to dive deep, and both should have a good time. I wanted the dialog system to be the same.

In the end I settled on a model in which the player needs to make decisions, but there are no bad choices. Once the player has chosen a question to ask, the conversation shifts in that direction, and (usually) does not return. The player must choose which topics to broach and which bits they want to home in on, but none of the choices are wrong or bad. They just cause different tidbits of information to be revealed, and no matter what path is taken I can ensure that the critical pieces of information are displayed.

Inklewriter’s toolset gave me enough power to author conversations with a lot of structural variance. Some conversations loop (allowing several chances to ask the same question), others are nearly linear. Some conversations result in significantly different revelations, others end up at the same place via different paths through the tree. The structure is fairly free-form, which I like. My goal is to make it feel as little like a mechanism to be reverse-engineered as possible.
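The guarantee that critical information is always displayed, even in conversations that loop, can be checked mechanically instead of by replaying every path. The Python sketch below is one way to do it, assuming the conversation has been reduced to a hypothetical adjacency map from node name to successor names, where an ending is any node with no successors; `can_skip` and `skippable_facts` are illustrative names. A fact is guaranteed when no ending is reachable from the root once the nodes that reveal it are removed. A breadth-first search answers that in time linear in the size of the graph, and unlike enumerating paths it terminates on cyclic conversations.

```python
from collections import deque


def can_skip(graph, root, reveals):
    """True if some complete conversation (root to an ending) never visits `reveals`."""
    if root in reveals:
        return False
    seen, queue = {root}, deque([root])
    while queue:
        node = queue.popleft()
        if not graph.get(node):  # no successors: the conversation can end here
            return True
        for nxt in graph[node]:
            if nxt not in reveals and nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return False


def skippable_facts(graph, root, facts):
    """Facts (name -> revealing nodes) that at least one playthrough can miss."""
    return sorted(f for f, nodes in facts.items() if can_skip(graph, root, set(nodes)))


# Hypothetical interview: "friend" loops back so the player can ask again.
CONVERSATION = {
    "start": ["alibi", "ring"],
    "alibi": ["alone", "friend"],
    "ring": ["mother"],
    "alone": ["wrapup"],
    "friend": ["start", "wrapup"],
    "mother": ["wrapup"],
    "wrapup": [],
}
FACTS = {
    "victim_argued": ["wrapup"],  # critical: funneled through the shared ending
    "ring_owner": ["mother"],     # optional detail for players who dig deeper
}

print(skippable_facts(CONVERSATION, "start", FACTS))  # ['ring_owner']
```

Under this model the goal is for no critical fact to appear in the result, while optional tidbits like `ring_owner` are expected to show up.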

Discoveries

I had never written branching dialog before, and I learned a lot in the process of writing for DEAD SECRET CIRCLE. This stuff is probably old hat for folks who have built these types of systems before, but it was new to me.

The biggest realization I had was that I could communicate the protagonist’s personality to the player through her questions. Communicating the protagonist’s feelings is a constant struggle for me. She has very few opportunities to talk about herself or what she is thinking. Her main mode of communication is commentary on things that the player examines in the game world, but these messages must be succinct and to-the-point. There’s not a lot of time for introspection. Figuring out that I could hint at her thought process by writing questions in a certain way was a revelation for me.

I also learned how important it is to record dialog early, long before there are voice actors working on the project. Jonny and his wife Shannon recorded all of the dialog in the game themselves, which let us test all kinds of critical systems like spatialized VO and lip synching. But most importantly, it made it very obvious when a conversation made no sense. Reading it out loud, with all the pauses and inflections and imperfect pronunciations that are normal to human speech, clearly separated the text that sounded natural from the text that did not. By the time we did get voice acting done, we already knew what we wanted from nearly every line because we’d had placeholder audio in the game for months.

Speaking of voice acting, I also learned how to make life really hard for the men and women who lent their voices to my characters. Forcing them to say foreign words in languages they don’t speak was one mistake. Relying on hard-to-say-out-loud technical words (like “ideomotor”) cost us some takes. I briefly panicked when I realized I’d written a character with an accent whose accuracy I couldn’t verify myself. Fortunately, we worked with seasoned pros who made it through the minefield of my dialog text without losing limbs. But next time I’ll try to remember that actual humans have to perform the words I write out loud.

And… Scene

The branching dialog system in DEAD SECRET CIRCLE was one of the most enjoyable parts of the project for me. I liked building the system to run it and writing the dialog itself, and I learned a bunch in the process. The original DEAD SECRET was a bit lonesome (there’s nobody in that house but you and the killer), and allowing direct interaction with a wider cast of characters was one of our core design goals for CIRCLE. The dialog system (and related character animation and lip sync systems, which I’ll write about another time) ended up doing nearly all the heavy lifting here, and I’m really happy with the result.

DEAD SECRET CIRCLE comes out pretty soon for both VR and traditional platforms. There’s a Steam page up if you are interested, and a mailing list you can join if you’d like to get updates about the game.

Robot Invader’s internal technology stack is called KARLOFF, and it has had a hand in every game we’ve shipped since our first in 2011. The great thing about a mature technology stack is that we can build new tools very quickly. Using existing KARLOFF tech, we built a new version of our occlusion system in less than a week.
